A little about our GTA V project

24 Oct 2024

Less technical to start with

The architecture of an IT system is one of the most important aspects of its development. Performance, scalability, and system security depend on it. It is important to make the right architectural choices that will suit your business and technical needs. In our case, the business needs are:

1. Performance - the server must handle a large number of players at once without performance drops.

2. Scalability - the server must be able to scale up or down as needed.

3. Security - the server must be protected against attacks and other threats.

4. Availability - the server must be available to players 24/7.

How does this translate into development?

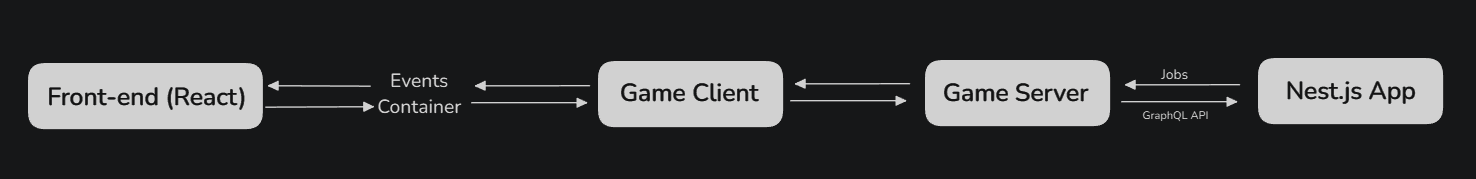

The diagram above is a simplified view of the entire Project:V infrastructure. Describing the whole thing would take ten A4 pages. Here we focus only on the elements directly related to gameplay; we do not go into backend and DevOps matters in detail, although more on those may appear in future blog posts.

Main Concept

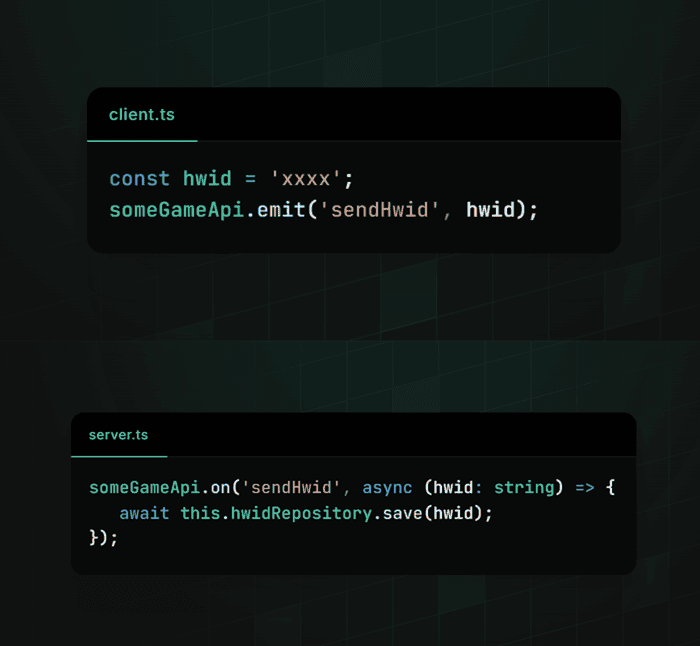

Most game servers use a standard client-server layout with event-based communication over UDP. In simple terms, the client sends events to the server, which processes them. The server is responsible for handling natives (the game API), data persistence (database, cache, file system), data transmission (streaming), and many other things. This approach is sufficient for most applications, but at large scale, optimization problems arise.

An example of such communication:
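As a minimal sketch of that classic model (not code from any real game framework), the TypeScript below shows a server that receives decoded client events and handles both the logic and the storage itself. The names `MonolithicGameServer`, `bank:deposit`, and the in-memory balance map are hypothetical, and the UDP transport is left out so the example stays self-contained.

```typescript
// Hypothetical shape of an event after the server has decoded a client datagram.
type GameEvent = {
  name: string;
  playerId: number;
  payload: Record<string, unknown>;
};

type Handler = (event: GameEvent) => string;

// In the classic layout the game server owns both the logic and the storage.
class MonolithicGameServer {
  private handlers = new Map<string, Handler>();
  // Stand-in for the database/cache: account balance per player id.
  private balances = new Map<number, number>();

  on(name: string, handler: Handler): void {
    this.handlers.set(name, handler);
  }

  // Route an incoming event to its handler, if one is registered.
  dispatch(event: GameEvent): string {
    const handler = this.handlers.get(event.name);
    return handler ? handler(event) : `unknown event: ${event.name}`;
  }

  // Persistence lives inside the game server process.
  deposit(playerId: number, amount: number): number {
    const next = (this.balances.get(playerId) ?? 0) + amount;
    this.balances.set(playerId, next);
    return next;
  }
}

const server = new MonolithicGameServer();

// Business rules are validated and applied directly in the event handler.
server.on("bank:deposit", (event) => {
  const amount = Number(event.payload.amount);
  if (!Number.isFinite(amount) || amount <= 0) return "rejected";
  return `balance=${server.deposit(event.playerId, amount)}`;
});
```

Every new feature adds another handler and more state to the same process, which is where the scaling problems mentioned above tend to start.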

We decided on a completely different solution from the standard one. We expose a GraphQL API that is responsible for all business logic and data storage, including the database, the cache, and more. The game server itself does not contain any game-critical logic. It is purely a gateway that passes information to the API, which is the heart of the system and the place where all operations happen.
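To make the gateway idea concrete, here is a minimal sketch under stated assumptions: the `Deposit` mutation, its fields, and the `GameGateway` class are hypothetical and not taken from our schema. The transport is injected, so the sketch runs without a network; in practice it would send the `{ query, variables }` body to the API as JSON over HTTP.

```typescript
// Standard GraphQL request/response envelope (query + variables, data + errors).
type GraphQLRequest = { query: string; variables: Record<string, unknown> };
type GraphQLResponse = { data?: unknown; errors?: { message: string }[] };

// Anything that can deliver a request to the API; an HTTP client in practice.
type Transport = (body: GraphQLRequest) => Promise<GraphQLResponse>;

// Hypothetical mutation; the real schema is not described in this post.
const DEPOSIT_MUTATION = `
  mutation Deposit($playerId: ID!, $amount: Int!) {
    deposit(playerId: $playerId, amount: $amount) { balance }
  }
`;

// The game server keeps no business logic: it only forwards and unwraps.
class GameGateway {
  private readonly send: Transport;

  constructor(send: Transport) {
    this.send = send;
  }

  async onDeposit(playerId: string, amount: number): Promise<number> {
    const response = await this.send({
      query: DEPOSIT_MUTATION,
      variables: { playerId, amount },
    });
    // Validation and persistence errors come back from the API, not from here.
    if (response.errors && response.errors.length > 0) {
      throw new Error(response.errors.map((e) => e.message).join("; "));
    }
    const data = response.data as { deposit: { balance: number } };
    return data.deposit.balance;
  }
}
```

Because the gateway only depends on the `Transport` type, the same class can be exercised in tests with a fake API and pointed at the real one in production.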

Our solution is partly an implementation of separation of concerns: the game server (GS) only forwards a given request to the API, while the business logic lives somewhere else entirely. This makes the GS heavily dependent on the API, but it is the job of our DevOps team to ensure one hundred percent uptime regardless of traffic (we take C10k, that is, ten thousand concurrent connections, as the endurance limit). The biggest advantage in our eyes is the even distribution of load between two completely independent instances. If we wanted, these could even be two independent machines, or the API could run in the cloud (AWS/GCP). The latter is unlikely because of the very high cost of the cloud, which with our traffic could reach several thousand dollars per month.

Another advantage is reuse. On other platforms, we often had to write multiple applications that ultimately implemented the same logic and operated on the same database: the game shared its database with the forum and the admin panel. In our case, when writing a gamehub, an adminhub, or even a mobile application, we use exactly the same endpoints and the same API instance as the game, so any development is much simpler than before.

An undoubted disadvantage of this solution is the additional implementation overhead: to change something in the game, you first need to adapt the API, then take care of proper versioning and a proper release flow. Fortunately, our teams are big enough to handle this comfortably.

As in any distributed architecture, there are difficulties that do not occur in monolithic applications. The biggest problem we can run into is, of course, the API being down at the moment the game server launches. Fortunately, we are prepared for this and know how to approach it. The answer is multiple instances and load balancing. Load tests will certainly tell us a lot as well.
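As an illustration of the multiple-instances idea, the sketch below rotates requests across API instances and skips any that are marked unhealthy. It is an assumption-laden example, not our production setup: the `RoundRobinBalancer` class, the `healthy` flag, and the instance URLs are all hypothetical, and real deployments usually delegate this to a dedicated load balancer.

```typescript
// Hypothetical description of one API instance behind the balancer.
type Instance = { url: string; healthy: boolean };

class RoundRobinBalancer {
  private next = 0;
  private readonly instances: Instance[];

  constructor(instances: Instance[]) {
    this.instances = instances;
  }

  // Return the next healthy instance, starting from the rotation cursor.
  pick(): Instance {
    const count = this.instances.length;
    for (let offset = 0; offset < count; offset++) {
      const index = (this.next + offset) % count;
      const candidate = this.instances[index];
      if (candidate.healthy) {
        // Advance the cursor past the instance we just handed out.
        this.next = (index + 1) % count;
        return candidate;
      }
    }
    throw new Error("no healthy API instance available");
  }
}

// Example pool; the URLs are placeholders.
const pool: Instance[] = [
  { url: "http://api-1.internal", healthy: true },
  { url: "http://api-2.internal", healthy: true },
  { url: "http://api-3.internal", healthy: true },
];
const balancer = new RoundRobinBalancer(pool);
```

If one instance goes down, flipping its `healthy` flag (for example from a periodic health check) is enough for traffic to flow to the remaining ones.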

The solution to the problems that follow from our architecture is, above all, correct handling of HTTP clients and timeouts that fire promptly, so that we prevent buffer overflows and cascading errors. In the future, we may also think about circuit breakers, but that is a topic for a completely different blog post.
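A minimal sketch of the timeout part, assuming a promise-based client: the hypothetical helper `withTimeout` rejects if an API call takes longer than a given number of milliseconds, so a slow API cannot leave game-server requests waiting indefinitely. The helper name and the `TimeoutError` label are our own inventions for this example.

```typescript
// Build an error that callers can recognise by its name.
function timeoutError(ms: number): Error {
  const error = new Error(`API call exceeded ${ms} ms`);
  error.name = "TimeoutError";
  return error;
}

// Settle with the result of `work`, or reject once `ms` milliseconds have passed.
function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;

  const deadline = new Promise<never>((_resolve, reject) => {
    timer = setTimeout(() => reject(timeoutError(ms)), ms);
  });

  // Whichever promise settles first wins; the timer is always cleaned up.
  return Promise.race([work, deadline]).finally(() => {
    if (timer !== undefined) clearTimeout(timer);
  });
}
```

Note that this only stops the caller from waiting; it does not cancel the underlying request. With `fetch`, for instance, an `AbortController` signal can be passed along so the request itself is aborted when the deadline fires.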